🇬🇧 Applying Angular Runtime Configurations in Dockerized Environments | Hacker Noon

With the shift to cloud-first and the rise of managed infrastructure and orchestration such as AWS, Azure AKS or GCP clusters, the application landscape needs to prepare and adjust itself to match newly arising requirements. This is in itself neither new nor unknown, but acknowledging it is probably the first important step.

Outside-In Deep Dive

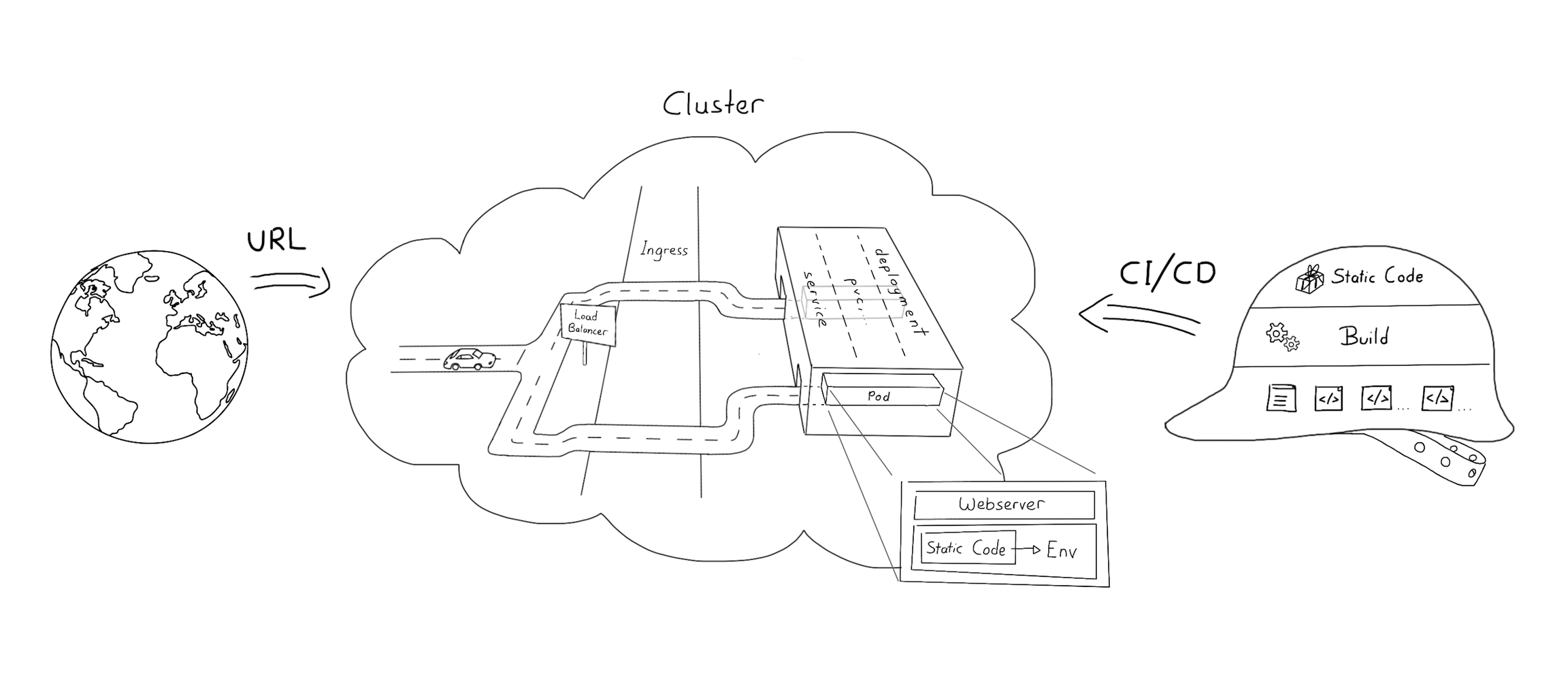

Let’s break the technical architecture down, starting from the user’s perspective. What does the technical setup look like under the hood when a user visits an application orchestrated by Kubernetes, from the moment the application's URL is entered into the browser?

Graphic by https://www.linkedin.com/in/theresa-ohm-06b7a156

The first configuration to kick in is the DNS config, which maps the URL to an IP address, in these scenarios the IP of a load balancer. The load balancer, having close to no other job than living up to its name, forwards the user’s request to the orchestration, where a web server is listening. Since we operate Kubernetes environments, we limit ourselves here to the case where this web server is the ingress controller of the Kubernetes cluster. There are multiple types of ingress controllers; we decided to go with a default option, an nginx controller.

As a side note, this is the first layer where application-specific configuration may be required, for example when the application needs to transmit larger payloads (proxy-body-sizes) and/or support long-running operations (proxy-timeouts), since the nginx ingress controller ships with implicit default settings.

Underneath the ingress controller, the orchestration takes place: via a deployment, the Kubernetes pod(s) serve the application container. The deployment can be configured to specify the environment the container runs in. For the containers, we again made an explicit design decision.

Our containers are Docker images that include not only the built Angular application (static JavaScript) but also an nginx web server, since we want to be able to run the Docker container independently of the infrastructure and its orchestration, for example for development and/or testing purposes. With this approach we prioritized flexibility and comfort over performance. The Docker image is built in the usual way, using a multi-stage build to compile the Angular source code and bundle the output together with the configured nginx web server.

Inside-Out Deep Dive

Having described the Outside-In perspective above, let’s now concentrate on Angular and its default characteristics. Angular's concepts include environments, a configuration mechanism intended for exactly these scenarios, where different settings should apply depending on whether you develop locally or run the application in production. The downside of the Angular concept is that it is a build-time configuration only.

This means that an Angular application is first compiled into static JavaScript, and the environment configuration is fixed at compile time as well. Since there is no way around this static code, you cannot configure the application for the infrastructure it runs on unless you know that infrastructure and set its values in advance.

How to change a configuration into a runtime-configuration

A first important step in redesigning an Angular application so that it pairs well with Docker and orchestration is to switch the environment strategy to a runtime configuration. This can be achieved by dropping the default concept of environments and using an Ajax/XHR call to retrieve the configuration dynamically instead. @juristr describes this in more detail in https://juristr.com/blog/2018/01/ng-app-runtime-config/.

In short, we implement a config service that is initialized at application start by the appResolver, which fetches the config via an XHR call. The resolver is registered in the appRoutes as a guard. The application’s modules can then simply inject the config.

app.routes.ts

app-resolver.service.ts

config.service.ts
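The following is a minimal, framework-free sketch of the idea behind the three files named above, not the original code. It assumes a flat key/value config, a hypothetical `assets/config.json` path, and a `ConfigFetcher` function type standing in for Angular's HTTP client; `resolveConfig` is an illustrative stand-in for the resolver that gates the routes.

```typescript
// Shape of the runtime configuration: a flat map of string values (assumption).
type AppConfig = Record<string, string>;

// Abstraction over the HTTP call so the sketch runs without Angular.
// In a real application this role would be played by Angular's HttpClient.
type ConfigFetcher = (url: string) => Promise<AppConfig>;

class ConfigService {
  private config: AppConfig | null = null;
  private readonly fetcher: ConfigFetcher;

  constructor(fetcher: ConfigFetcher) {
    this.fetcher = fetcher;
  }

  // Fetch the configuration on first use; calls made after it has
  // arrived reuse the cached result. The default path is hypothetical.
  async load(url: string = "assets/config.json"): Promise<AppConfig> {
    if (this.config === null) {
      this.config = await this.fetcher(url);
    }
    return this.config;
  }

  // Read a value after load() has completed; fail loudly otherwise.
  get(key: string): string {
    if (this.config === null) {
      throw new Error("Configuration has not been loaded yet");
    }
    const value = this.config[key];
    if (value === undefined) {
      throw new Error("Missing configuration key: " + key);
    }
    return value;
  }
}

// Stand-in for the resolver: navigation proceeds only after this resolves.
async function resolveConfig(service: ConfigService): Promise<boolean> {
  await service.load();
  return true;
}
```

Because the resolver awaits `load()` before any route activates, components that later call `get()` can treat the config as already present.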

Our approach goes one step further by also formalizing the structure of the JSON keys to be fetched. This is for good reason and turns out to be a crucial step.

By adding a unique prefix to the JSON keys, we can later use this prefix to dynamically assign values sourced from the respective environment to those keys. For local development this setup is straightforward, as we can simply put the values into the config.json file directly.
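To make the naming convention concrete, here is a small sketch. The prefix `NG_APP_` and both keys are hypothetical, since the article does not state which prefix or keys it actually uses; `findUnprefixedKeys` is an illustrative helper, not part of the original code.

```typescript
// Hypothetical prefix; the article does not name the one it uses.
const CONFIG_PREFIX = "NG_APP_";

// What a local-development config.json could contain, written as an
// object literal. Keys and values are made up for illustration.
const localConfig: Record<string, string> = {
  NG_APP_API_URL: "http://localhost:8080/api",
  NG_APP_TITLE: "Local development",
};

// Return the keys that violate the naming convention, so a build or
// startup check can reject a config file with stray keys.
function findUnprefixedKeys(
  config: Record<string, string>,
  prefix: string
): string[] {
  return Object.keys(config).filter((key) => !key.startsWith(prefix));
}
```

A check like this keeps the convention enforceable: any key that does not carry the prefix cannot be matched to an environment variable later on.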

Going a step further and wrapping the Angular application in a Docker container, we need to adjust the config.json based on the container environment. To do so, we add a shell script as the Docker entrypoint, during which the config.json is (re-)generated with values from the current environment.

docker-entrypoint.sh

Dockerfile

We used jq and some basic scripting here, but there are of course dozens of ways to achieve the same result. This approach allows us to specify the Angular config values as environment variables in docker-compose as well as in the deployment manifest.
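The regeneration step itself can be sketched briefly. The original entrypoint is a shell script using jq; the version below expresses the same idea in TypeScript purely for illustration. It assumes that every prefixed key in the default config is overridden by an environment variable of the same name when one is set. The function names and this exact override rule are assumptions, not the article's implementation.

```typescript
// Override prefixed keys of the default config with same-named
// environment variables; keys without an override keep their default.
function applyEnvironment(
  defaults: Record<string, string>,
  env: Record<string, string | undefined>,
  prefix: string
): Record<string, string> {
  const result: Record<string, string> = {};
  for (const key of Object.keys(defaults)) {
    // Only keys carrying the prefix may be overridden from the environment.
    const override = key.startsWith(prefix) ? env[key] : undefined;
    result[key] = override !== undefined ? override : defaults[key];
  }
  return result;
}

// Serialize the merged config as the text of the regenerated config.json.
function renderConfigJson(
  defaults: Record<string, string>,
  env: Record<string, string | undefined>,
  prefix: string
): string {
  return JSON.stringify(applyEnvironment(defaults, env, prefix), null, 2);
}
```

Starting from the defaults rather than from the environment keeps the set of keys stable: the environment can change values, but it cannot introduce keys the application does not know about.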

docker-compose.yml

deployment.yml

In the end, we have established a flexible yet consistent mechanism to manage Angular configs/environments dynamically under different circumstances. So far we haven’t noticed any downsides to this approach!

References:

[1] "How can I use environment variables in Nginx.conf" - https://serverfault.com/questions/577370/how-can-i-use-environment-variables-in-nginx-conf

[2] "How to use environment variables to configure your Angular application without a rebuild" - https://www.jvandemo.com/how-to-use-environment-variables-to-configure-your-angular-application-without-a-rebuild/

Source : https://hackernoon.com/applying-angular-runtime-configurations-in-dockerized-environments-kr3a33pr
